Using custom AI models with IWL

I Write Like uses AI models to help you edit and rewrite text. It can correct grammar; shorten, expand, or paraphrase text; reformat it; or turn it into a list or table. It also supports custom prompts, so you can tell the AI exactly what you want it to do.

I Write Like supports OpenAI and Google Gemini models through their APIs, using API keys that you provide.

In the latest update, we’ve added support for custom AI models, including local models that run on your own computer. You can now use any model you like, as long as it’s served through an OpenAI-compatible API.

You can use a local AI server, such as Ollama, to download and run models, and then configure I Write Like to use it. This way, you can run capable open models, such as Meta’s Llama 3, Microsoft’s Phi-3, Google’s Gemma, and many others, for free and privately, because your text never leaves your computer.

How to configure Ollama with I Write Like

In this guide, we’ll show you how to install and configure Ollama with the Phi-3 model and use it with I Write Like. We’ll do it on macOS, but the setup is comparable on Windows and Linux.

1. Download and install Ollama

Download it from the Ollama website or, if you’re on a Mac and have the Homebrew package manager installed, type this in Terminal:

brew install ollama

2. Launch Ollama

Open Terminal and type:

OLLAMA_ORIGINS="https://iwl.me" ollama serve

The OLLAMA_ORIGINS environment variable is required so that I Write Like, which runs in your browser at https://iwl.me, is allowed to make cross-origin requests to the local address that Ollama listens on (http://localhost:11434 by default).
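If I Write Like can’t reach the model, one way to test this step is to send the server a request carrying the same Origin header that the browser sends. The Python sketch below does that using only the standard library. It assumes Ollama’s default address and, based on our understanding of Ollama’s CORS handling, that an accepted origin is echoed back in the Access-Control-Allow-Origin response header while a refused one gets an error status such as 403. The function names are our own.

```python
import urllib.error
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434/"  # Ollama's default address
IWL_ORIGIN = "https://iwl.me"           # the origin passed in OLLAMA_ORIGINS


def origin_allowed(status: int, allow_header: Optional[str], origin: str) -> bool:
    """Decide whether a response shows that the server accepted our origin.

    Assumption: an accepted origin gets status 200 and is echoed back
    (or "*") in the Access-Control-Allow-Origin header.
    """
    return status == 200 and allow_header in (origin, "*")


def check_server(url: str = OLLAMA_URL, origin: str = IWL_ORIGIN) -> bool:
    """Send a GET request with an Origin header, the way a web page would."""
    req = urllib.request.Request(url, headers={"Origin": origin})
    try:
        with urllib.request.urlopen(req, timeout=5) as resp:
            return origin_allowed(
                resp.status, resp.headers.get("Access-Control-Allow-Origin"), origin
            )
    except urllib.error.HTTPError:
        # An error status (for example 403) means the origin was refused.
        return False
    except OSError:
        # Connection refused or timed out: the server is probably not running.
        return False
```

Call check_server() in a Python session while ollama serve is running. True suggests the origin is accepted; False means either the server isn’t reachable or it didn’t accept https://iwl.me, in which case check that OLLAMA_ORIGINS was set in the same command that started the server.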

3. Download Phi-3 using Ollama

Leave the Ollama server running, open another Terminal window or tab, and type:

ollama pull phi3:mini

This will download the model.
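To confirm that the download finished, you can run ollama list, or ask the server for its list of local models. The Python sketch below reads Ollama’s native /api/tags endpoint (part of Ollama’s own API, not the OpenAI-compatible one) and looks for a model name; fetch_tags and has_model are illustrative helper names, not part of Ollama or I Write Like.

```python
import json
import urllib.request

# Ollama's native endpoint that lists locally installed models.
TAGS_URL = "http://localhost:11434/api/tags"


def fetch_tags(url: str = TAGS_URL) -> dict:
    """Fetch the list of local models as parsed JSON."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)


def has_model(tags: dict, name: str) -> bool:
    """Return True if a model called `name` appears in the /api/tags response."""
    return any(m.get("name") == name for m in tags.get("models", []))
```

With the server running, has_model(fetch_tags(), "phi3:mini") should return True once the pull has completed.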

4. Configure a custom model in I Write Like

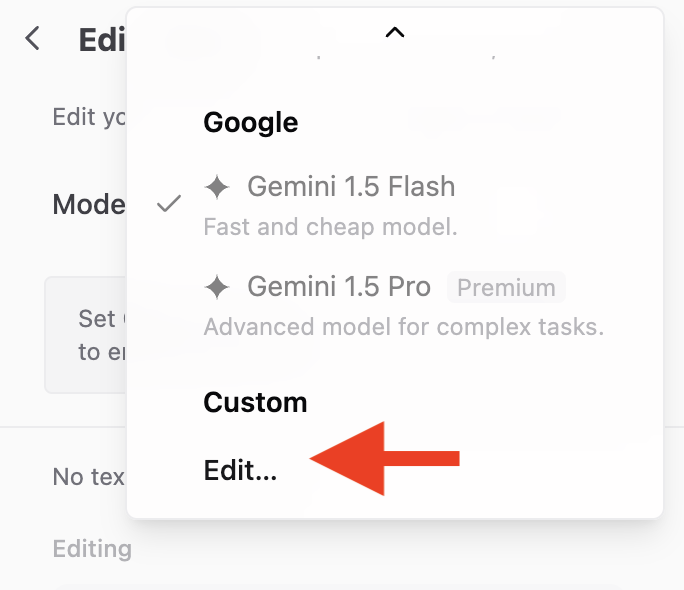

Open the Edit with AI tool, click the Model selector, and click Edit… under Custom.

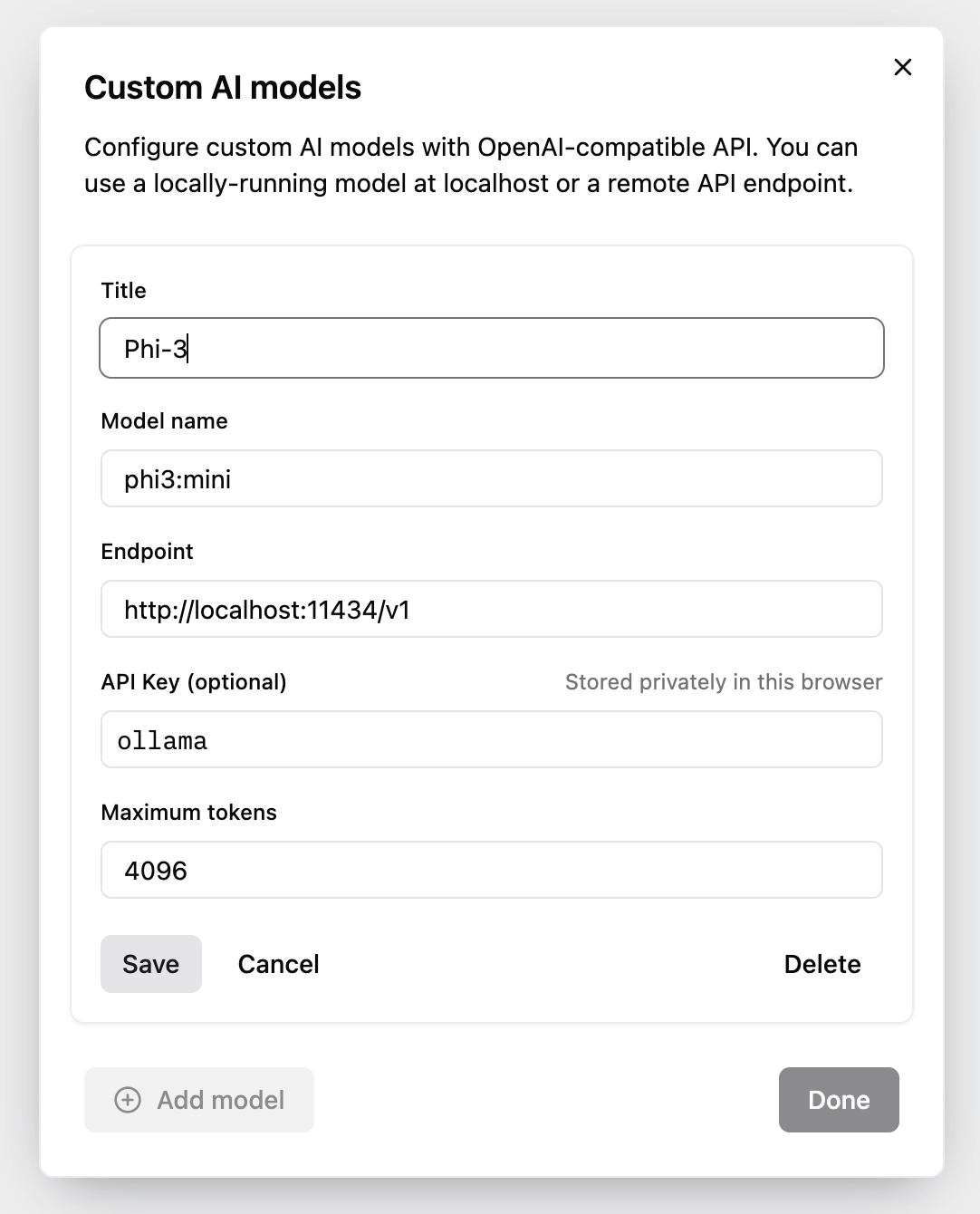

Click Add model and configure the model:

- Title: Phi-3 (this is for your reference)
- Model name: phi3:mini
- Endpoint: http://localhost:11434/v1
- API key: ollama
- Maximum tokens: 4096

Click Save, then Done.

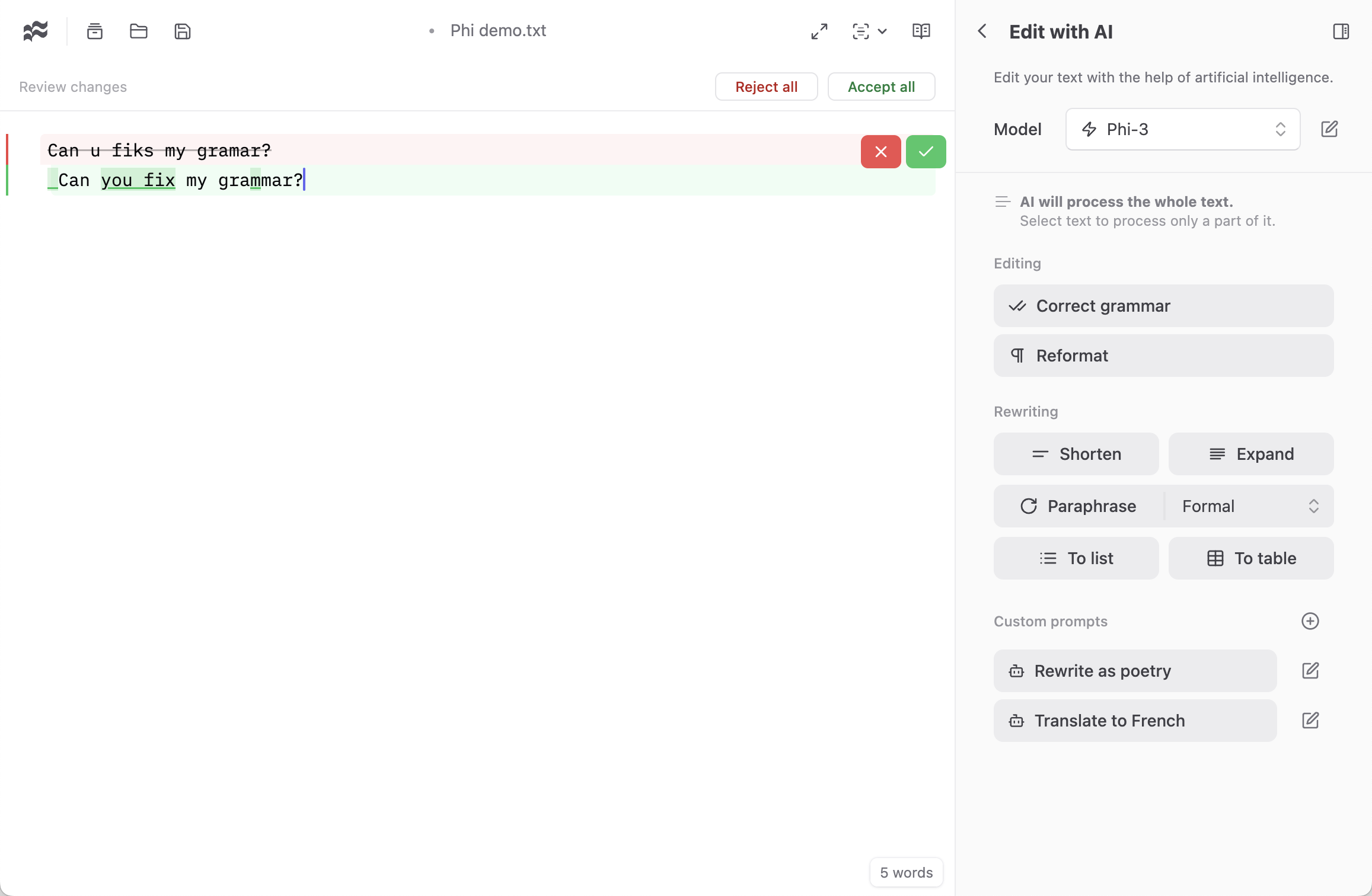

That’s it! Select the newly added model and try it on some text.

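Phi-3 Mini is a small but capable model that runs on most computers, even those with limited memory. If you want a more capable model, try Llama 3: download it with ollama pull llama3 and add another custom model with the model name llama3. It is larger, so it needs more memory.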
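I Write Like sends the requests for you, so no code is needed. If you’re curious how the custom model fields fit together, the Python sketch below builds the kind of request an OpenAI-compatible client sends: a POST to the endpoint plus /chat/completions, with the model name and maximum tokens in the JSON body and the API key as a bearer token. We assume here that “Maximum tokens” maps to the max_tokens parameter; this is an illustration, not I Write Like’s actual code, and CONFIG, build_request, and complete are hypothetical names. Ollama doesn’t check the API key, which is why a placeholder such as ollama works.

```python
import json
import urllib.request

# Hypothetical config mirroring the custom model form fields.
CONFIG = {
    "model": "phi3:mini",
    "endpoint": "http://localhost:11434/v1",
    "api_key": "ollama",
    "max_tokens": 4096,
}


def build_request(config: dict, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request from the form fields."""
    url = config["endpoint"].rstrip("/") + "/chat/completions"
    body = {
        "model": config["model"],
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": config["max_tokens"],  # assumed mapping of "Maximum tokens"
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Ollama ignores the key, but OpenAI-style clients always send one.
            "Authorization": "Bearer " + config["api_key"],
        },
        method="POST",
    )


def complete(config: dict, prompt: str) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_request(config, prompt), timeout=120) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

With the server running, complete(CONFIG, "Shorten this sentence: ...") should return the model’s reply. To try Llama 3 instead, change "model" to "llama3".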